Using outcomes of transformative experiences (TE) for enhancing advertising effectiveness

Measuring TE ad performance in comparison to other ads. Previous research identified research gaps in enticing potential consumers interested in transformation through appropriate marketing materials as well as in measuring ad-evoked emotions in the context of tourism marketing. The quantitative online study aimed to understand how an ad in which outcomes of TE were included […]

Openly collaborative, organized, and functional service process ecosystem paving the way to success in Finnish medical tourism

Keywords: medical tourism, ecosystem, tourism supply chain, tourism service process, service process ecosystem, collaboration in tourism. Medical tourism: tourism or just a health care intervention abroad? Medical tourism is originally derived from health tourism, which includes travelling outside one’s native health care administrative region for the reconstruction or strengthening of personal health by the means […]

Research: Adaptation and resilience of the key organizations in the Lappeenranta region after losing their main international customer segment

Introduction of the study. The aim of this master’s thesis was to investigate how organizations in the Lappeenranta region have managed the recent crisis of the COVID-19 pandemic and the conflict between Russia and Ukraine. Both of these crises have tested the resilience of the destination and its organizations as they have caused the loss […]

How could overtourism be prevented via green digital advertising?

Have you visited a destination that has “lost its magic” due to the crowds there? Authenticity and residents’ quality of life have been in danger since overtourism started to eat into the capacity of destinations. Overtourism is referred to as “loving places to death”². Three decades ago, Venice was home to over 120 […]

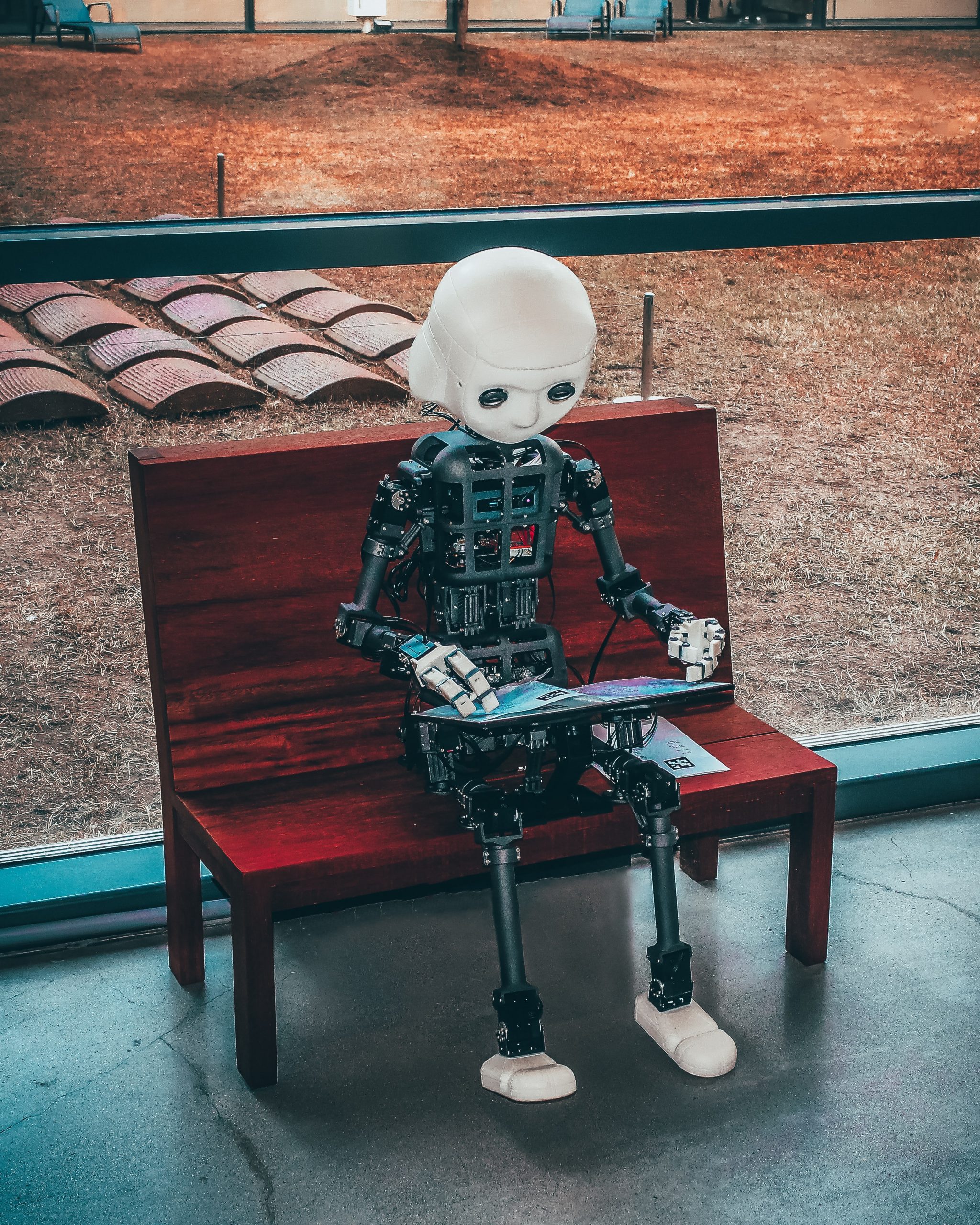

Does Virtual Reality (VR) Travel have the potential to be more?

Retrieved from Forbes.com. Due to the pandemic, the global tourism industry had to come to a sudden halt. Even as lockdowns were slowly lifted, travelers were skeptical, and the threat of the virus is still very real. Recently, a second wave has swept across […]

Enhancing customer experience with smart hotel technologies

Have you checked into a hotel using a mobile app? Or maybe the light in your hotel room switched off automatically when you opened the door to leave? These features are only the tip of the iceberg in smart hotels. What are smart hotels? According to Dalgic and Birdir [1], a smart hotel adopts a […]

How are digital nomads shaping the travel industry?

Who are digital nomads? Technology is enabling a new, dynamic remote workforce called Digital Nomads. Digital Nomads are a population of independent workers who choose to embrace a location-independent, technology-enabled lifestyle that allows them to travel and work remotely, anywhere in the world. Our research finds that […]

How to Improve Online Presence of Small Tourism Businesses?

Online information search is a crucial and often overlooked part of today’s consumers’ decision-making, and most of it is done through search engines or social media. Searches on search engines and social media platforms correlate with visits to destinations [1]. Here I look at different research papers on these subjects, in order […]

How content producers can use AI in digital marketing

Do you want to use AI to create better content? There’s no doubt that content production is getting more complex every day. It’s getting hard to get noticed on social media. Your inbox is packed with repetitive messages, and you must fight against a massive crowd to get people to read your content. And then […]

How can AR (Augmented Reality) be used to improve the customer experiences in Spas?

The tourism industry is changing significantly as information technology becomes more and more a part of it. Information technology has a critical influence on making tourism businesses more competitive, as it provides the tools that a tourism business needs to improve its marketing and management (Buhalis & O’Connor, 2005). Information technology has changed the way tourism businesses […]